Neil Patel co-founded Crazy Egg in 2005. Today, 300,000 websites use Crazy Egg to understand what’s working on their website (with features like Heatmaps, Scrollmaps, Referral Maps, and User Recordings), fix what isn’t (with a WYSIWYG Editor), and test new ideas (with a robust A/B Testing tool).

There’s a joke in the marketing world that A/B testing actually stands for “Always Be Testing.” It’s a good reminder that you can’t get stellar results unless you can compare one strategy to another, and A/B testing examples can help you visualize the possibilities.

I’ve run thousands of A/B tests over the years, each designed to help me home in on the best copy, design, and other elements that make a marketing campaign truly effective.

I hope you’re doing the same thing. If you’re not, it’s time to start. A/B tests can reveal weaknesses in your marketing strategy, but they can also show you what you’re doing right and confirm your hypotheses.

Without data, you won’t know how effective your marketing assets truly are.

To refresh your memory — or introduce you to the concept — I’m going to explain A/B testing and its importance. Then, we’ll dive into a few A/B testing examples that will inspire you to create your own tests.

To Recap: What Exactly Is an A/B Test?

An A/B test compares two versions of the same marketing asset, such as a web page or email, by showing each version to one of two equal halves of your audience. Based on conversion rates or other metrics, you can then decide which version performs better.
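To make the split-and-compare idea concrete, here is a minimal Python sketch. It assumes you bucket visitors by hashing a visitor ID (a common approach, not necessarily how Crazy Egg does it); the experiment name `homepage-headline` and the visitor counts are hypothetical. It then computes each version’s conversion rate and the relative lift of B over A, where a result of 0.27 would mean B converts 27 percent better than A.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-headline") -> str:
    """Deterministically bucket a visitor into "A" or "B" (roughly 50/50).

    The experiment name is a hypothetical placeholder. Hashing it together
    with the visitor ID means a returning visitor always sees the same version.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).digest()
    # Use the parity of the first hash byte to pick a half of the audience.
    return "A" if digest[0] % 2 == 0 else "B"


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted; 0.0 when no one has seen the variant."""
    return conversions / visitors if visitors else 0.0


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant versus the control (0.27 means +27%)."""
    if control_rate == 0:
        raise ValueError("control conversion rate must be non-zero")
    return (variant_rate - control_rate) / control_rate


# Hypothetical counts, for illustration only.
rate_a = conversion_rate(conversions=200, visitors=5000)
rate_b = conversion_rate(conversions=254, visitors=5000)
print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  lift: {relative_lift(rate_a, rate_b):+.0%}")
```

Hashing the experiment name together with the visitor ID keeps each returning visitor in the same bucket and lets separate experiments split traffic independently of one another.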

But it doesn’t stop there. You don’t want to settle for one A/B test. That will give you very limited data. Instead, you want to keep testing to learn more about your audience and find new ways to convert prospects into leads and leads into customers.

Remember: Always Be Testing.

For instance, you might start with the call to action on a landing page. You A/B test variations in the button color or the CTA copy. Once you’ve refined your CTA, you move on to the headline. Change the structure, incorporate different verbs and adjectives, or change the font style and size.

You might also have to test the same things multiple times. As your audience evolves and your business grows, new needs will emerge, both for the company and for your audience. It’s an ever-evolving process that can ultimately have a huge impact on your bottom line.

An A/B test runs until you have enough data to make a solid decision. How long that takes depends on how many people see each variation and on how large the difference between the variations turns out to be. You can run multiple A/B tests at the same time, but stick to one variable for each.
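How much data is “enough” can be estimated before you launch. The sketch below uses the standard normal-approximation sample-size formula for comparing two conversion rates with a two-sided test. The 5 percent significance level and 80 percent power are common conventions assumed here, not Crazy Egg settings, and the 4 percent and 5 percent conversion rates are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, expected_rate: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant to detect the difference
    between two conversion rates with a two-sided two-proportion z-test.

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to estimate a sample size")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_power = z.inv_cdf(power)          # quantile for the desired power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    n = (z_alpha + z_power) ** 2 * variance / (baseline_rate - expected_rate) ** 2
    return math.ceil(n)  # round up: you can't show a page to part of a visitor


# Hypothetical: a 4% baseline, hoping to detect a lift to 5%.
print(visitors_per_variant(0.04, 0.05))
```

Because the difference between the two rates appears squared in the denominator, halving the lift you want to detect roughly quadruples the traffic each variant needs.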

For example, while you test the headline on a landing page, you might also test the subject line of your latest email marketing campaign. Changing just one variable per test ensures that you know what had an impact on your audience’s responses.

When (And How) Do You Know You Need to Run A/B Tests?

The simple answer to this question is that you should always run A/B tests. If you have a website, a business, and an audience, testing right away gives you an advantage over the competition.

Realistically, though, you’ll get more accurate results with an established business than with a brand-new one. An established business already generates targeted traffic and qualified leads, so its test results are more likely to reflect the behavior of its target market.

This doesn’t mean A/B testing is useless for a new business. It just means that you might get less accurate results.

The best time to run A/B tests is when you want to achieve a specific goal. For instance, if you’re not satisfied with your homepage’s conversion rate, A/B test changes to its copy, images, and other elements. Find new ways to persuade people to follow through on the next step in the conversion process.

3 of the Best A/B Testing Examples to Inspire You (Case Studies)

Now, it’s time for the proof. Let’s look at three A/B testing examples so you can see how the process works in action. I’ll describe each test, including the goal, the result, and the reason behind the test’s success.

Example 1: WallMonkeys

If you’re not familiar with WallMonkeys, it’s a company that sells a wide variety of wall decals for homes and businesses.

The company used Crazy Egg to generate user behavior reports and to run A/B tests. As you’ll see below, the results were pretty incredible.

The goal

WallMonkeys wanted to optimize its homepage for clicks and conversions. It started with its original homepage, which featured a stock-style image with a headline overlay.

There was nothing wrong with the original homepage. The image was attractive and not too distracting, and the headline and CTA seemed to work well with the company’s goals.

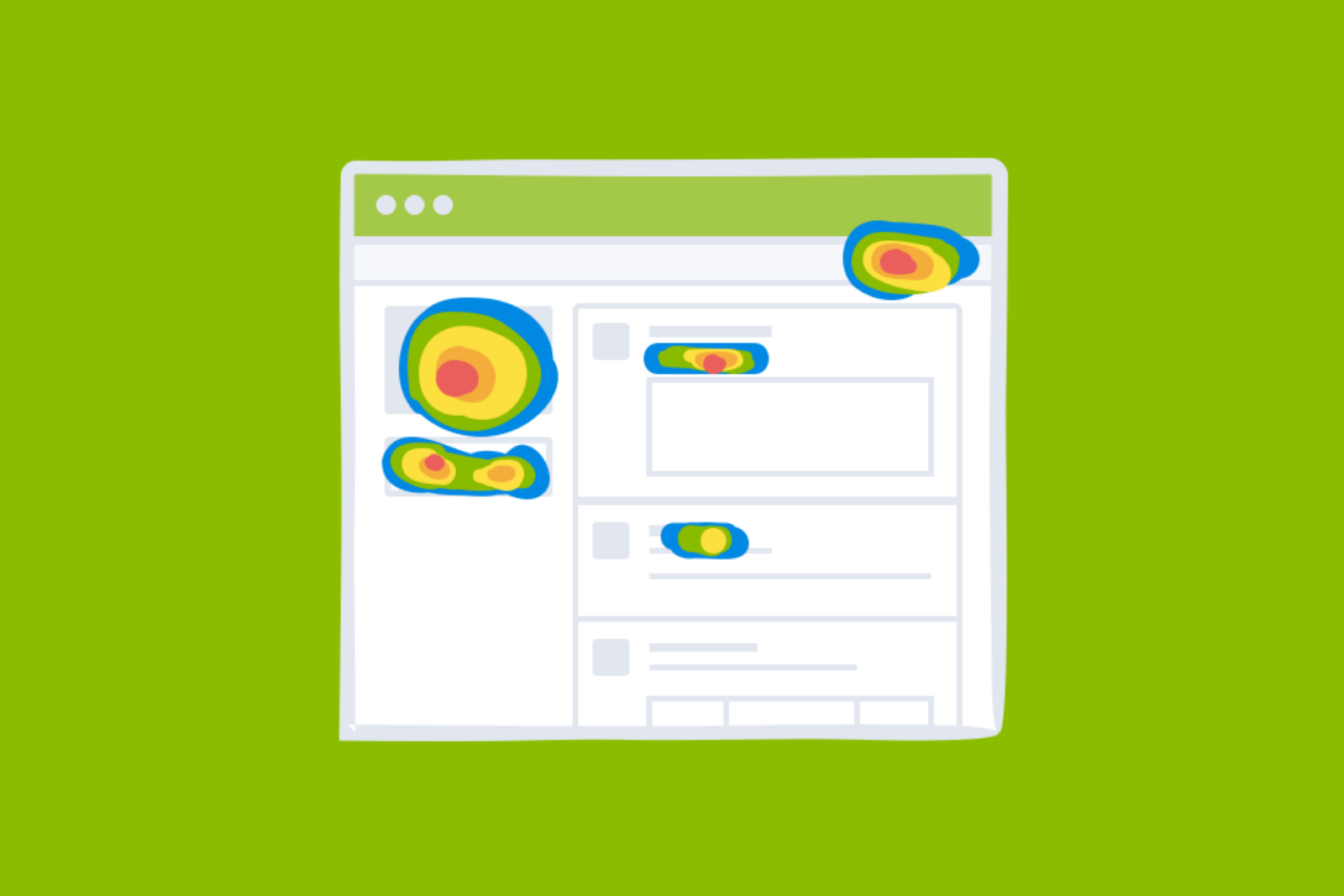

First, WallMonkeys ran Crazy Egg Heatmaps to see how users were navigating the homepage. Heatmaps and Scrollmaps allow you to decide where you should focus your energy. If you see lots of clicking or scrolling activity, you know people are drawn to those places on your website.

As you can see, there was lots of activity on the headline, CTA, logo, and search and navigation bar.

After generating the user behavior reports, WallMonkeys decided to run an A/B test. The company swapped the stock-style image for a more whimsical alternative meant to show visitors what they could do with WallMonkeys products.

The new design converted at a rate 27 percent higher than the control.

WallMonkeys didn’t stop there. For the next test, the business replaced its slider with a prominent search bar. The idea was that customers would prefer to search for the specific items they were interested in.

Result

The second test increased the conversion rate by 550 percent. Those are incredible results, even for a company as popular as WallMonkeys. By not stopping at the first test, the company gained substantial profit potential as well as a better user experience for its visitors.

Why it works

Before you start an A/B test, you form a hypothesis, ideally based on data. For instance, WallMonkeys used Heatmaps and Scrollmaps to identify areas of visitor activity, then used that information to predict that an image change might lead to more conversions.

They were right. The initial 27 percent increase might seem small in comparison to the second test, but it’s still significant. Anyone who doesn’t want 27 percent more conversions, raise your hand.

Just because one A/B test yields fruitful results doesn’t mean you can’t do better. WallMonkeys realized that, so it launched another test, which proved even more successful than the first.

When you’re dogged about testing website elements that matter, you can produce startling results.

Example 2: Electronic Arts

When Electronic Arts, a successful video game publisher, released a new version of one of its most popular games, the company wanted to get it right. SimCity 5, a simulation game that allows players to construct and run their own cities, was all but guaranteed to sell well, and Electronic Arts wanted to capitalize on that popularity.

According to HubSpot, Electronic Arts relied on A/B testing to get its sales page for SimCity 5 just right.

The goal

The goal of this test was to improve sales. Electronic Arts wanted to maximize revenue from the game immediately upon its release as well as through pre-sale efforts.

These days, people can buy and download games immediately. Digital distribution has made plastic-encased game discs nearly obsolete, especially since companies like Electronic Arts promote digital downloads. Downloads are less expensive for the company and more convenient for the consumer.

Even so, small details can have a big influence on conversions. Electronic Arts wanted to A/B test different versions of its sales page to find out how it could increase sales substantially.

Result

I’m highlighting this particular A/B testing example because it shows how hypotheses and conventional wisdom can blow up in our faces. For instance, most marketers assume that advertising an incentive will result in increased sales. That’s often the case, but not this time.

The control version of the pre-order page offered 20 percent off a future purchase for anyone who bought SimCity 5.

The variation eliminated the pre-order incentive.

As it turns out, the variation performed more than 40 percent better than the control. Avid fans of SimCity 5 weren’t interested in an incentive. They just wanted to buy the game. As a result of the A/B test, half of the game’s sales were digital.

Why it works

The A/B test for Electronic Arts revealed important information about the game’s audience. Many people who play a popular game like SimCity don’t play any other games. They like this particular franchise. Consequently, the 20-percent-off offer didn’t resonate with them.

If you make assumptions about your target audience, you’ll eventually get it wrong. Human behavior is difficult to predict, so you need A/B testing to generate data you can rely on. If you were surprised by the result of this A/B test, you might want to check out others that we highlighted in a recent marketing conference recap.

Example 3: Humana

Humana, an insurance carrier, ran a fairly straightforward A/B test with huge results. The company had a banner with a simple headline, a CTA, and an image. Through A/B testing, the company realized that it needed to change a couple of things to make the banner more effective. Like WallMonkeys, Humana shows that one test just isn’t enough.

The goal

According to Design For Founders, Humana wanted to increase the click-through rate (CTR) on this banner. It looked good as-is, but the company suspected it could improve CTR by making simple changes.

They were right.

The initial banner had a lot of text. There was a number in the headline, which often leads to better conversions, and a few lines of copy in a bulleted list. That’s pretty standard, but it wasn’t giving Humana the results it wanted.

The second variation reduced the copy significantly, and a couple of other changes, including the image and color scheme, rounded out the differences between the control and the winner. In a follow-up test, the CTA changed from “Shop Medicare Plans” to “Get Started Now.”

Result

Simply cleaning up the copy and changing the picture led to a 433 percent increase in CTR. After changing the CTA text, the company experienced a further 192 percent boost.

This is another example of incredible results from a fairly simple test. Humana wanted to increase CTR and did so admirably by slimming down the copy and changing a few aesthetic details.

Why it works

Simplicity often rules when it comes to marketing. When you own a business, you want to talk about all its amazing features, benefits, and other qualities, but that’s not what consumers want.

They’re looking for the path of least resistance. This A/B testing example shows that people often respond better to slimmed-down copy and more straightforward CTAs.

Start Your Own A/B Testing Now

It’s easy to A/B test your own website, email campaign, or other marketing efforts. The more effort you put into your tests, the better the results.

Start with the pages on your website that get the most traffic or contribute most to conversions. If an old blog post hardly gets any views anymore, testing it is a waste of energy.

Focus on your homepage, landing pages, sales pages, product pages, and similar parts of your site. Identify critical elements that might contribute to conversions.

As mentioned above, you can run A/B tests on multiple pages at the same time. Just make sure you’re testing only one element per page. After that test ends, you can test something else.

How Crazy Egg’s A/B Testing Tool Can Help You Boost Results

Crazy Egg offers several A/B testing benefits that other tools don’t. For one thing, it’s fast and simple to set up: you can have your first A/B test running in just a few minutes, while other tools can require hours of work.

You don’t need any coding skills to conduct tests like the A/B testing examples above. The software does the heavy lifting for you. Additionally, you don’t have to worry about checking every five minutes to know when to shut down the losing variant; Crazy Egg’s multi-armed bandit testing algorithm automatically funnels the majority of traffic to the winner for you.
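The article doesn’t describe how Crazy Egg’s bandit algorithm works internally, so the sketch below is a generic illustration of one well-known multi-armed bandit strategy, Beta-Bernoulli Thompson sampling, rather than Crazy Egg’s implementation. The `true_rates` values are hypothetical and exist only to simulate whether a visitor converts; in a live test you would record real conversions instead.

```python
import random
from typing import List, Sequence


def thompson_sampling(true_rates: Sequence[float], visitors: int = 10_000,
                      seed: int = 0) -> List[int]:
    """Simulate Beta-Bernoulli Thompson sampling over several variants.

    true_rates are hypothetical conversion rates, used only to simulate
    outcomes. Returns how many visitors each variant received.
    """
    rng = random.Random(seed)  # seeded so the simulation is reproducible
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    shown = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each variant from its
        # Beta(1 + successes, 1 + failures) posterior.
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        # Show this visitor the variant with the highest draw.
        arm = max(range(len(draws)), key=draws.__getitem__)
        shown[arm] += 1
        # Simulate the visitor's response and update that variant's counts.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return shown


traffic = thompson_sampling([0.05, 0.15])
print(traffic)
```

Each visitor goes to whichever variant wins a random draw from its posterior, so traffic drifts toward the better performer as evidence accumulates, while weaker variants still get occasional visits in case early results were misleading.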

Along with generating user behavior reports to help you decide what to test, you can test in a continuous loop so there’s no downtime in your quest for marketing success.

Conclusion

The A/B testing examples illustrated above give you an idea of the results you can achieve through testing. If you want to boost conversions and increase sales, you need to make data-driven decisions.

Guesswork puts you at a disadvantage, especially since your competitors are probably conducting their own tests. If you know a specific element on your page contributes heavily to conversions, you can trust it to keep working for you.

Plus, you get to see the results of your test in black and white. It’s comforting to know that you’re not just throwing headlines and CTAs at your audience, but that you know what works and how to communicate with your target customers.